The basic question I’m trying to answer with this post is: Can a bunch of smartphone-wielding, normal humans give me useful information about what wines to buy? Vivino desperately wants the answer to be an unambiguous, “Yes!” Based on my experience, the most enthusiastic answer I could give is, “It’s better than nothing.” And the expert reviewers, to be fair, do give us nothing for the vast majority of wines.

First, a bit of background on Vivino. From what I can tell, it is the most widely used wine app in the world, with over 60 million app downloads and over 15 million wines in its database. It is a privately held company, so not much information is publicly available, such as whether it is turning a profit, its financials, or its deals.

As a business, Vivino is hard to classify. It was founded to solve the fundamental problem wine poses to the consumer: nearly infinite product variety, with very little information to weigh the options. What other product in a typical supermarket has several hundred unique offerings, ranging in price from less than $5 to over $100? The average consumer has almost no chance to make a satisfying choice. Vivino wanted to solve that problem through crowd-sourced wine scores and reviews–like Yelp, but focused 100% on wine. That was a noble starting place.

But you can also buy wines on the Vivino app. It is sort of like Amazon in its infancy, when you could order a product through the platform but the order was usually fulfilled by another business. Amazon has clearly expanded beyond that humble beginning, and Vivino is moving in the same direction. Still, commissions for referring app users to sellers, along with ad placements on the app, remain its major sources of revenue.

Vivino was founded by tech entrepreneurs, so it's no surprise that data mining and algorithms are heavily used to customize and optimize everything you see on the app. How they actually do this is secret sauce, for sure, but the main data they have to work with is harvested from app users' activity on the platform. Vivino definitely tracks what wines you buy, what wines you score, and what you scored them. They also track what wines you search for and look at. I would bet a month's salary it is all used to maximize the likelihood of you and me making an in-app purchase. Vivino also has a wine club that sends 6 bottles every 6 weeks, at a price level the subscriber chooses. The wines are "curated" by Vivino based on what they know about you. Truth in advertising: I have not purchased anything on the Vivino app, so what happens when you become an actual customer is unknown to me.

About Vivino Wine Scores…

Vivino's wine scores, plus its vast wine database, are absolutely the honey that attracts customers to the platform. I have a serious concern about a business that depends on me deciding to buy a wine from its app, but also claims to provide unbiased information about the quality of those same wines. Yelp at least puts a fig leaf over this obvious conflict by listing "sponsored results" (translation: paid placements) above the "all results" listing. Vivino does not do that. Did that 2018 El Enemigo Bonarda end up at the top of my Vivino app feed because it was the best value and fit, based on my previously expressed preferences? Or because El Enemigo is paying 10 cents more than Catena Zapata for a referral from Vivino?

I also have serious concerns about the way scores and reviews are solicited in Vivino. My main concern is that app users are shown the current average score for a wine before they record their own score. Here is the same question asked two ways:

- “On a scale of 1 to 5, with 1 being terrible and 5 being superb, what is your score for this wine?”

- “Everyone else thinks this wine is a 4.5 out of 5–what do you think it is?”

By presenting the current score for a wine to a new scorer, Vivino essentially poses the second question rather than the first. I pointed this out to Vivino and asked them for data to evaluate this concern. Specifically, I requested that they show app-user scores in sequential blocks of 50, in the order they were submitted, with the average score and standard deviation calculated for each block. If presenting the current score does not influence a new scorer, the standard deviation for each sequential block should stay relatively constant, and the block averages should bounce around according to sampling error. If, on the other hand, presenting the current score does influence new scorers, the standard deviation should decline from block to block as more incoming scores align with the presented score, and the block averages should converge toward the full-sample average. Vivino's reply to this request:

- “We truly appreciate you for coming up with this suggestion to try to prevent biased and influenced ratings by our users. This is indeed something we have yet to try on Vivino. We are always so grateful for any feedback from our great community. I have passed your idea on to our Dedicated Product Developers Team, I can’t promise that your idea will be implemented but I have passed on the inquiry along with your contact details so they would the have means of getting in touch with you if they decide to be open about the outcome. It is also essential since it is about ratings that determine the wine’s potential and qualities.”

Kudos to Vivino for replying substantively, rather than blowing me off. I have not heard back from the DPDT (love that it is capitalized!), but I also haven’t been de-platformed.
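For anyone curious what the block test I proposed would look like in practice, here is a minimal Python sketch. It assumes you have a single wine's individual scores in the order they were submitted (Vivino does not publish that, so the input is hypothetical), and the function names `block_stats`, `stdev_trend`, and `mean_gaps` are my own illustrations, not anything Vivino provides.

```python
from statistics import mean, stdev


def block_stats(scores, block_size=50):
    """Split chronologically ordered scores (oldest first) into consecutive
    blocks and return (block_mean, block_stdev) for each full block.
    A trailing partial block is dropped so every block has the same size."""
    if block_size < 2:
        raise ValueError("block_size must be at least 2 to compute a standard deviation")
    stats = []
    for start in range(0, len(scores) - block_size + 1, block_size):
        block = scores[start:start + block_size]
        stats.append((mean(block), stdev(block)))
    return stats


def stdev_trend(stats):
    """Least-squares slope of block standard deviation vs. block index.
    Near zero: spread is stable over time. Clearly negative: later
    scorers agree with each other more and more."""
    n = len(stats)
    if n < 2:
        raise ValueError("need at least two blocks to estimate a trend")
    xs = range(n)
    ys = [sd for _, sd in stats]
    x_bar = (n - 1) / 2
    y_bar = mean(ys)
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    den = sum((x - x_bar) ** 2 for x in xs)
    return num / den


def mean_gaps(scores, stats):
    """Distance of each block mean from the full-sample average."""
    overall = mean(scores)
    return [abs(m - overall) for m, _ in stats]
```

A clearly negative slope from `stdev_trend`, together with block means sitting ever closer to the overall average, would be consistent with anchoring on the displayed score. It would not prove it, and with only a handful of blocks the slope will be noisy, so I would treat it as a warning sign rather than a verdict.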

The concern about how early scores affect later ones is not academic. Fake reviews have become a global industry, and the methods of influencing online ratings are limited only by the energy and imagination of fake reviewers. I believe it would be very easy for an enterprising wine marketer to put a newly released wine up on Vivino and seed its aggregate score with 30 or so fake high scores. If the bias described above occurs, those initial scores could taint all later scores, even those from "real" app users. Again, nothing on Vivino's website addresses these concerns.

Other minor concerns are about sloppiness on Vivino’s part. For example, if you enter scores via the Vivino website using your browser, you input scores in 0.5 increments (i.e. you can score a wine 3.0 or 3.5, but not 3.3), using the classic “5-star” consumer rating rubric. On the smartphone app, scores are input by 0.1 increments, using a dial. Not a massive problem…but given how much Vivino pumps the value of their scores to attract users to the app, I thought they would have cleaned stuff like this up by now.

So with all this said, I went to my own notes on wines I have tasted and rated using my own simple system, to see whether Vivino would qualify as a "trusted reviewer" for me (see Post #6 for more on that topic). I went through the same process of comparing my rating of each wine to Vivino's and assessing the level of concurrence. I also calculated the percentage of "high outlier" scores Vivino provided, compared to both Wine Spectator and Wine Enthusiast (see Post #5 for more on how I identified rates of outlier scores by reviewer). Vivino did not qualify as a trusted reviewer for me (see table below). I strongly concurred with Vivino on only about half of the wines I tasted. The other half were either weak concurrence (one-third) or flat-out disagreement (one-in-ten). Also, about one-in-eight of the Vivino scores were "high outliers" compared to Wine Spectator and Wine Enthusiast, and that's a trust issue for me.

But…the fact that Vivino had a score for almost every wine I tasted and scored myself (201!), and that about half the time I concurred with its score, is something, to me. A problem with expert reviewers is that they don't review every wine. Far from it. Expert reviewers only review a small percentage of all wines, and ABSOLUTELY miss a LOT of really good wines. So, if I didn't have anything else to go on, I'd definitely look at Vivino. I might be one of those guys in the wine aisle looking at my smartphone…

…and Vivino Wine Reviews

Vivino is similar to Yelp in that a large cadre of app users offer up scores (almost always) and reviews of wines (less often). Hundreds of thousands of people have done so. Like Yelp, some reviewers are more prolific than others; some have contributed hundreds of reviews. I don't know if Vivino has the same ecosystem of high-profile reviewers/influencers with cult-like followings that Yelp has. As part of this post, I looked at the actual qualitative reviews posted on Vivino to see how useful they might be. Vivino groups the descriptors that user-reviewers post for a wine and presents them as "taste profiles," in addition to the aggregate score (see below).

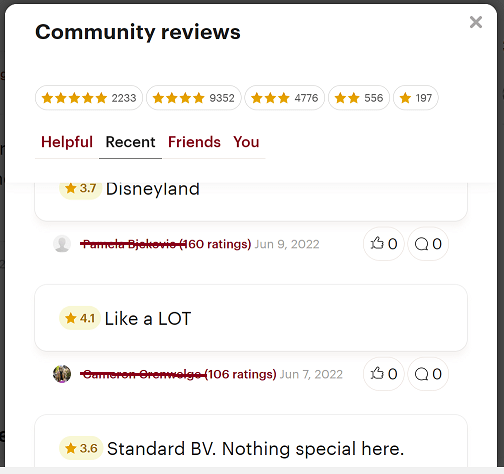

With several wines, I have noticed that the adjectives in reviews aligned with the tasting notes on the labels; i.e., if "ripe cherry" is mentioned on the label, it frequently gets mentioned in reviews. Fair enough. But most of the reviews were just garbage (see example below). Some reviews were formulaic and uninteresting (e.g., it's a zinfandel, so "blackberry and notes of pepper," that kind of thing). So reading them is, in my opinion, almost worthless. But I did develop the following curiosity:

- There are literally hundreds of thousands of Vivino reviewers–are there a handful out there I should follow? Let me know in comments if this would be of interest to you in a future post.